Implications of H20 coming back

Short post

The U.S. government has reversed its April ban on sales of Nvidia’s H20 and AMD’s MI308 AI chips, re-authorizing exports after striking a rare-earth minerals agreement with Beijing.

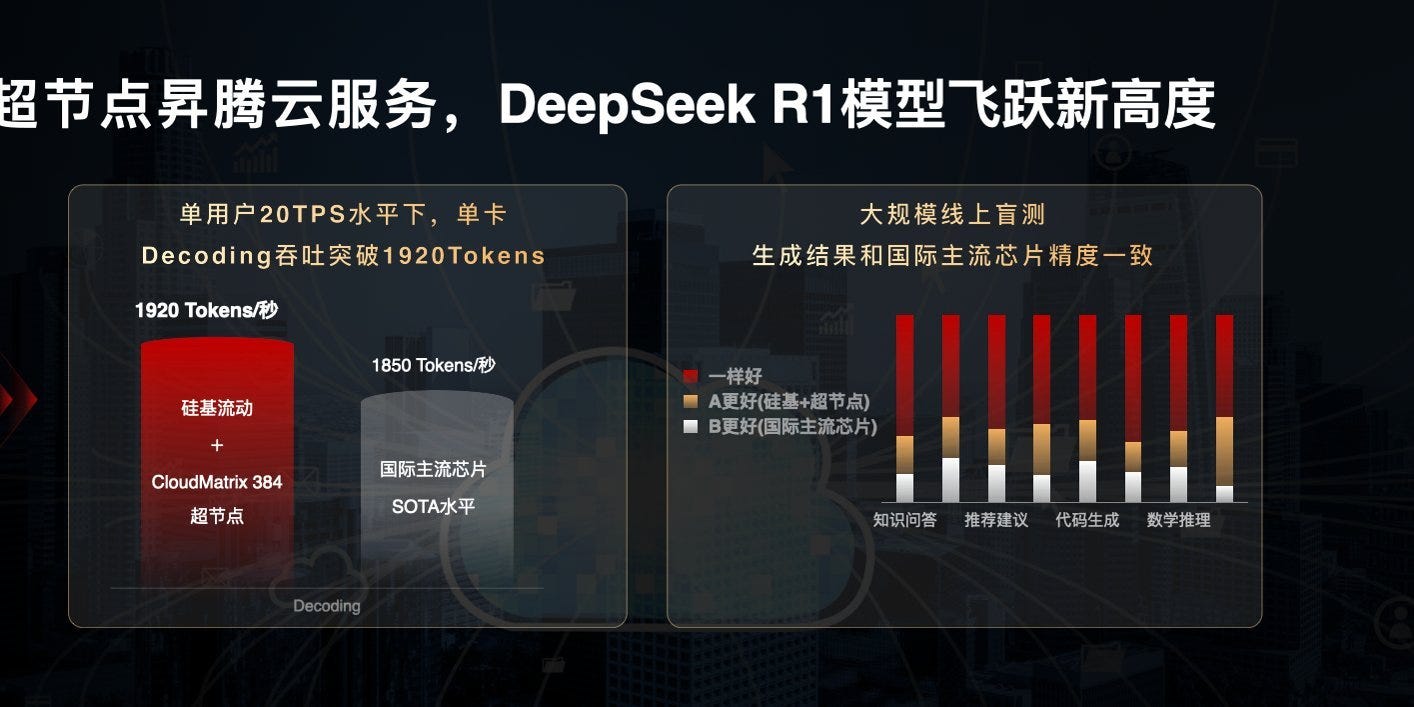

The resumption of H20 exports will reshape China's AI landscape. Huawei's Ascend 910C still outperforms the H20 on training workloads, but the H20's 4 TB/s of memory bandwidth makes it the best inference chip available in the Chinese market. Nvidia announced the H20E, with 144 GB of HBM3E, in January, and the company is sitting on 800K–1M H20/H20E units that it can ship to China as soon as export licenses are granted.

Nvidia doesn't plan to restart H20 production once this inventory is sold off. Instead, it plans to introduce a new chip for the Chinese market, the B20, which will be based on the Blackwell architecture and will likely have higher memory bandwidth than the H20.

Implications for the Chinese GPU Market

The return of the H20 and its successor to China will hurt Chinese GPU manufacturers. They can't match the H20 because HBM3/3E exports to China are banned, and CXMT can't mass-produce HBM3E before 2027. Without HBM3E, Chinese GPUs will keep trailing Nvidia in memory bandwidth, and therefore in inference speed. Chinese GPU manufacturers and startups also lack access to the advanced TSMC and Samsung nodes needed to produce high-performance training chips, and SMIC's 5nm capacity is fully allocated to Huawei. Their software stacks are also immature compared to CUDA or Huawei's CANN.

Chinese GPU startups like Cambricon, Enflame, MetaX, Iluvatar, Biren, and Moore Threads gained immense momentum after the H20 was banned, as cloud providers and big tech began testing and verifying their chips for procurement. The H20's return will effectively kill this momentum, leaving SOE and government procurement contracts as their only source of revenue. Cloud providers may keep them as a backup in case Nvidia GPUs get banned again, but the chances of large-scale procurement are very low. Huawei's Ascend chips (910B and 910C) will also be big losers. The 910C, with 800 TFLOPS and 3.2 TB/s of memory bandwidth, is good enough for training and comparable to the H100 (3.35 TB/s) at inference, but its bandwidth is 20% below the H20's 4 TB/s, which makes it roughly 20% worse at bandwidth-bound inference.
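The "20% worse at inference" figure follows from bandwidth alone: in single-stream decoding, each generated token requires streaming the model's weights from memory, so the tokens/s ceiling scales roughly with memory bandwidth. The sketch below is a back-of-envelope illustration only, assuming a hypothetical 70B-parameter dense model stored in FP8 and ignoring KV-cache traffic, batching, compute limits, and interconnect overhead; the function name is illustrative, not from any library.

```python
# Back-of-envelope sketch (assumption): single-stream decode is treated as
# purely memory-bandwidth bound, i.e. every generated token streams all model
# weights from HBM once. KV-cache reads, batching, compute limits, and
# interconnect overhead are deliberately ignored.


def max_decode_tokens_per_s(bandwidth_tb_s: float, params_billion: float,
                            bytes_per_param: float) -> float:
    """Hypothetical upper bound on tokens/s for one sequence on one chip."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_tb_s * 1e12 / weight_bytes


# Memory bandwidth figures as quoted in the post, in TB/s.
CHIPS = {"H20": 4.0, "Ascend 910C": 3.2}

if __name__ == "__main__":
    # Illustrative model: 70B dense parameters in FP8 (1 byte per parameter).
    for name, bw in CHIPS.items():
        tps = max_decode_tokens_per_s(bw, params_billion=70, bytes_per_param=1)
        print(f"{name}: ~{tps:.0f} tokens/s upper bound")
    ratio = CHIPS["Ascend 910C"] / CHIPS["H20"]
    print(f"910C / H20 bandwidth ratio: {ratio:.2f}")
```

Because the model size cancels out of the ratio, the 910C's ceiling lands at 80% of the H20's for any model under these assumptions; real deployments batch many requests, which shifts the bottleneck but not the direction of the gap.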

Implications for Chinese Data Center Buildout

Chinese big tech and cloud providers began ramping up CAPEX and bought a lot of H20s in 4Q 2024 and 1Q 2025, driven by the DeepSeek-R1 release and the dawn of the RL/inference age. The April ban caused CAPEX to crater in Q2; with the ban lifted, it is projected to recover slowly in Q3 and beyond.

Had the ban persisted, data center buildout would have slowed sharply in 2H 2025, since Chinese alternatives were still being tested and verified by big tech and cloud providers.

For next-generation chips with new architectures, many cards are still in the verification stage, covering compute performance, framework support, and numerical computation methods. October and Q4 2025 are expected to be critical milestones for chip testing and verification, with more significant progress anticipated then. Unified planning and design around second-generation chips will follow once the models are released, at which point relatively large supply and deliveries are expected. Test and comparison results for chips newly brought into inventory during Q4 will directly influence overall procurement decisions for 2026.

A big problem for procuring domestic alternatives is a lack of production capacity.

Even when domestic chip manufacturers open up sales channels, orders may not be deliverable if production capacity cannot keep up. Many domestic manufacturers currently face this problem: it's not that there are no orders, but that they dare not commit to large-volume deliveries. Cambricon is in the same situation. Chip design capabilities are actually quite strong, and many cards perform very well. The bigger problem is mass production: many manufacturers previously relied too heavily on TSMC, so we should not be overly optimistic that these well-designed cards will achieve stable mass production.

This should ease next year as SMIC continues to expand 7nm/5nm capacity and Huawei's own 7nm/5nm fabs come online. Cambricon has already started getting its chips fabbed at SMIC. Competing with the H20 will remain very hard without HBM3E, but production shouldn't be a problem for Chinese GPU manufacturers in 2026. Meanwhile, H20s will ensure that data center buildout in China doesn't slow down.

Implications For Chinese AI Labs

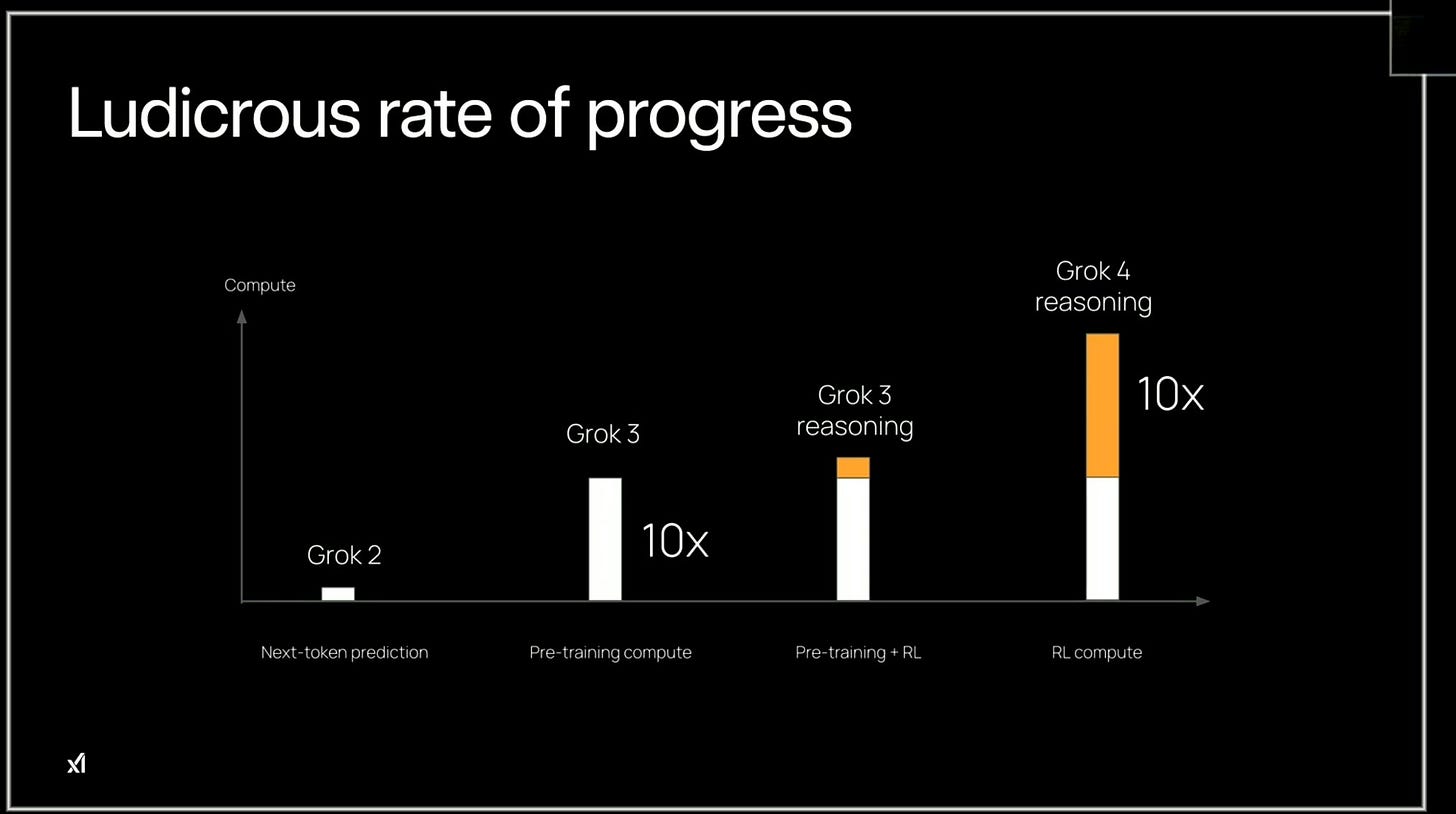

H20s will have a very positive impact on Chinese AI development. With the dawn of the inference/RL age, compute is shifting from training to inference. Even when training reasoning LLMs, labs use reasoning traces generated by existing LLMs as training data for the next generation, and the compute used to generate this synthetic data is rising exponentially. RL compute has already reached 50% of the total compute used to train a SoTA model like Grok 4.

H20s will solve major problems for Chinese AI labs, whose ability to serve users and expand AI services has been severely hampered by a lack of compute. Chinese labs serve users at very slow output speeds (20–25 tokens/s) compared to the 150–200 tokens/s of American labs. With H20s, they can expand their services and serve users at much higher output speeds. Higher output speeds will also cut the time needed to generate synthetic data for training next-gen LLMs, letting Chinese labs compete much more easily with U.S. labs. Because RL compute now matches pre-training compute (a 1:1 ratio) for SoTA models like Grok 4, a high-memory-bandwidth chip like the H20 will make reasoning-LLM training much easier for Chinese labs. The gap between SoTA Chinese and American models will likely continue to narrow over the next 18–24 months.

Implications For Nvidia

Nvidia's revenue from China will increase substantially from now on; China will probably contribute 20–25% of Nvidia's topline. Nvidia will also be able to maintain CUDA's dominance. Its biggest fear is a split in the CUDA ecosystem, and it doesn't want to lose the 1.5M CUDA developers in China. Nvidia will stall the growth and momentum of Chinese GPU startups and put pressure on Huawei.

Do you think lifting export controls will be a major problem for Chinese GPU manufacturers? I understand that they'll lose many domestic contracts in the short term, but will SOE and government contracts be enough to tide them over until manufacturing capacity in China scales up?

I wonder if the biggest long-term problem for the PRC semicon industry becomes the lack of impetus to build an alternative ecosystem to CUDA. That seems like a huge issue for their indigenous efforts.